Our upcoming paper at PAM: “NICBench”

We’re pleased to announce that our paper “What you need to know about (Smart) Network Interface Cards” by G. Katsikas, T. Barbette, M. Chiesa, D. Kostic and G. Maguire Jr. has been accepted at PAM’21!

We can’t publish the preprint yet, but we’ll do so as soon as possible 🙂

Network interface cards (NICs) are fundamental components of modern high-speed networked systems, supporting multi-100 Gbps speeds and increasing programmability. Offloading computation from a server’s CPU to a NIC frees a substantial amount of the server’s CPU resources, making NICs key to offer competitive cloud services. Therefore, understanding the performance benefits and limitations of offloading a networking application to a NIC is of paramount importance. In this paper, we measure the performance of four different NICs from one of the largest NIC vendors worldwide, supporting 100 Gbps and 200 Gbps. We show that while today’s NICs can easily support multi-hundred-gigabit throughputs, performing frequent update operations of a NIC’s packet classifier — as network address translators (NATs) and load balancers would do for each incoming connection — results in a dramatic throughput reduction of up to 70 Gbps or complete denial of service. Our conclusion is that all tested NICs cannot support high-speed networking applications that require keeping track of a large number of frequently arriving incoming connections. Furthermore, we show a variety of counter-intuitive performance artefacts including the performance impact of using multiple tables to classify flows of packets.

PAM will be held in late March, so stay tuned!

FOSDEM’21: FastClick and beyond…

In early February, my colleague Alireza Farshin and I gave a talk at FOSDEM, a huge open-source gathering. The video is now released!

In the talk, we present FastClick with a short demo, survey the existing alternative modular frameworks (mainly VPP and BESS), and then discuss the future of software dataplanes, which we believe our recent work PacketMill starts to address.

We mainly show that FastClick is still up to date with the competition and goes beyond the state of the art with PacketMill’s enhancements. We also redid an experiment at 100G showing that FastClick now improves on Click by more than 30x in a forwarding configuration. This is because we have kept maintaining FastClick for nearly 6 years now, considering pull requests and integrating recent research, while good old Click itself has sadly been stalling for a decade. I will write a blog post about the state of FastClick in the coming weeks.

I also bought the www.fastclick.dev domain to start a little showcase website. For now it redirects to GitHub. Feel free to help 🙂

A poster of our latest work, CrossRSS, a Stateless CPU-Aware Datacenter Load-Balancer

Today we will present a poster of our latest work at CoNEXT’20: CrossRSS! CrossRSS is a load balancer that spreads the load uniformly, even inside the servers. It uses knowledge of the dispatching done inside the servers (RSS) to purposely select less-loaded cores, without any server modification or inter-core communication on the server. Learn more by watching the short video!
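To make the idea a bit more concrete, here is a minimal sketch of RSS-aware server selection. It is not the CrossRSS implementation: the `Server` class, the `pick_server` helper, the assumption that all servers share one RSS key, and the assumption that the load balancer somehow knows each core's load are all hypothetical and only serve the illustration. The Toeplitz hash is the function NICs commonly use for RSS.

```python
from dataclasses import dataclass
from typing import List


def toeplitz_hash(key: bytes, data: bytes) -> int:
    """32-bit Toeplitz hash, the function commonly used by NICs for RSS."""
    if len(key) < len(data) + 4:
        raise ValueError("key must be at least 4 bytes longer than the input")
    key_int = int.from_bytes(key, "big")
    key_bits = len(key) * 8
    result = 0
    for i, byte in enumerate(data):
        for b in range(8):
            if byte & (0x80 >> b):
                # XOR in the 32-bit window of the key starting at this bit.
                shift = key_bits - 32 - (i * 8 + b)
                result ^= (key_int >> shift) & 0xFFFFFFFF
    return result


@dataclass
class Server:
    """Hypothetical view of a server as seen from the load balancer."""
    name: str
    indirection_table: List[int]  # RSS bucket -> core index
    core_load: List[float]        # assumption: known to the load balancer

    def core_for(self, rss_hash: int) -> int:
        # The NIC indexes its indirection table with the low bits of the hash.
        return self.indirection_table[rss_hash % len(self.indirection_table)]


def pick_server(servers: List[Server], rss_key: bytes, flow_tuple: bytes) -> Server:
    """Pick the server whose RSS-selected core is the least loaded.

    Assumption: every server uses the same RSS key, so one hash is enough.
    """
    h = toeplitz_hash(rss_key, flow_tuple)
    return min(servers, key=lambda s: s.core_load[s.core_for(h)])
```

The point of the sketch is that the decision needs nothing from the server at dispatch time: the load balancer predicts the core by replaying the hash and the table lookup on its own.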

The poster session will be held on the 4th of December at 2:30 CET on the Mozilla VR Hub.

Our latest paper “Cheetah”, a load balancer that guarantees per-connection-consistency

Cheetah is a new load balancer that solves the challenge of remembering which connection was sent to which server without the traditional trade-off between uniform load balancing and efficiency. Cheetah is up to 5 times faster than stateful load balancers and can support advanced balancing mechanisms that reduce the flow completion time by a factor of 2 to 3 without breaking connections, even while adding and removing servers.

More information at https://www.usenix.org/conference/nsdi20/presentation/barbette.

Our new paper RSS++: load and state-aware receive side scaling

I’m delighted to announce the publication of our latest paper titled “RSS++: load and state-aware receive side scaling” at CoNEXT’19.

Abstract

While the current literature typically focuses on load-balancing among multiple servers, in this paper, we demonstrate the importance of load-balancing within a single machine (potentially with hundreds of CPU cores). In this context, we propose a new load-balancing technique (RSS++) that dynamically modifies the receive side scaling (RSS) indirection table to spread the load across the CPU cores in a more optimal way. RSS++ incurs up to 14x lower 95th percentile tail latency and orders of magnitude fewer packet drops compared to RSS under high CPU utilization. RSS++ allows higher CPU utilization and dynamic scaling of the number of allocated CPU cores to accommodate the input load while avoiding the typical 25% over-provisioning.

RSS++ has been implemented for both (i) DPDK and (ii) the Linux kernel. Additionally, we implement a new state migration technique which facilitates sharding and reduces contention between CPU cores accessing per-flow data. RSS++ keeps the flow-state by groups that can be migrated at once, leading to a 20% higher efficiency than a state of the art shared flow table.
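As a rough illustration of what "modifying the indirection table to spread the load" can look like, here is a small sketch. It uses a simple greedy heuristic that I swapped in for the example; it is not the algorithm from the paper. The function name `rebalance` and the per-bucket load counters are assumptions.

```python
from typing import List


def rebalance(table: List[int], bucket_load: List[int], n_cores: int) -> List[int]:
    """Return a new indirection table (bucket -> core) with a more even load.

    Greedy stand-in, not the RSS++ algorithm: repeatedly move one bucket
    from the most loaded core to the least loaded core, as long as the
    move narrows the gap between the two.
    """
    table = list(table)
    core_load = [0] * n_cores
    for bucket, core in enumerate(table):
        core_load[core] += bucket_load[bucket]

    while True:
        src = max(range(n_cores), key=core_load.__getitem__)
        dst = min(range(n_cores), key=core_load.__getitem__)
        gap = core_load[src] - core_load[dst]
        # Only buckets lighter than the gap improve the balance when moved.
        candidates = [b for b, c in enumerate(table)
                      if c == src and 0 < bucket_load[b] < gap]
        if not candidates:
            return table
        # Prefer the bucket whose load is closest to half of the gap.
        best = min(candidates, key=lambda b: abs(bucket_load[b] - gap / 2))
        table[best] = dst
        core_load[src] -= bucket_load[best]
        core_load[dst] += bucket_load[best]
```

Because whole buckets move between cores, the flow state attached to a bucket can follow it in one go, which is the intuition behind migrating state by groups.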

Our latest paper “Metron: NFV Service Chains at the True Speed of the Underlying Hardware” has been accepted at NSDI 2018!

Abstract

In this paper we present Metron, a Network Functions Virtualization (NFV) platform that achieves high resource utilization by jointly exploiting the underlying network and commodity servers’ resources. This synergy allows Metron to: (i) offload part of the packet processing logic to the network, (ii) use smart tagging to setup and exploit the affinity of traffic classes, and (iii) use tag-based hardware dispatching to carry out the remaining packet processing at the speed of the servers’ fastest cache(s), with zero intercore communication. Metron also introduces a novel resource allocation scheme that minimizes the resource allocation overhead for large-scale NFV deployments. With commodity hardware assistance, Metron deeply inspects traffic at 40 Gbps and realizes stateful network functions at the speed of a 100 GbE network card on a single server. Metron has 2.75-6.5x better efficiency than OpenBox, a state of the art NFV system, while ensuring key requirements such as elasticity, fine-grained load balancing, and flexible traffic steering.

Do HUAWEI CloudEngine switches support OpenFlow?

No, no and no.

Despite what the ONF says (https://www.opennetworking.org/product-registry/), they do not. Huawei’s OpenFlow implementation is actually broken, starting with the very first OpenFlow HELLO message: it reports support for OpenFlow 1.4, but the rest of the message is absolutely not structured as defined in the standard.
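For reference, this is roughly what a controller expects from a HELLO. The sketch below follows the layout in the OpenFlow specification (8-byte header, then optional hello elements since 1.3.1, including the version bitmap); the function name `parse_hello` is mine, and it is not the exact check I ran against the switch.

```python
import struct

OFPT_HELLO = 0            # message type of HELLO
OFPHET_VERSIONBITMAP = 1  # hello element carrying the supported versions


def parse_hello(msg: bytes):
    """Parse an OpenFlow HELLO; return (version, xid, advertised versions).

    Raises ValueError when the message does not follow the standard layout.
    """
    if len(msg) < 8:
        raise ValueError("shorter than the 8-byte OpenFlow header")
    version, msg_type, length, xid = struct.unpack_from("!BBHI", msg, 0)
    if msg_type != OFPT_HELLO:
        raise ValueError("not a HELLO message")
    if length != len(msg):
        raise ValueError("header length does not match the message size")

    versions = set()
    offset = 8
    while offset < length:
        if length - offset < 4:
            raise ValueError("truncated hello element header")
        elem_type, elem_len = struct.unpack_from("!HH", msg, offset)
        if elem_len < 4 or offset + elem_len > length:
            raise ValueError("invalid hello element length")
        if elem_type == OFPHET_VERSIONBITMAP:
            bitmaps = msg[offset + 4:offset + elem_len]
            if len(bitmaps) % 4:
                raise ValueError("version bitmap is not made of 32-bit words")
            for i in range(0, len(bitmaps), 4):
                (word,) = struct.unpack_from("!I", bitmaps, i)
                for bit in range(32):
                    if word & (1 << bit):
                        # Bit N of word W stands for wire version W * 32 + N.
                        versions.add(i // 4 * 32 + bit)
        # Elements are padded to a multiple of 8 bytes.
        offset += (elem_len + 7) // 8 * 8
    return version, xid, versions
```

Wire version 0x04 is OpenFlow 1.3 and 0x05 is OpenFlow 1.4, so a well-formed HELLO from a 1.4 switch that also speaks 1.3 would yield version 5 and the set {4, 5}.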

After contacting all parties, it is clear that nobody will do anything about it, especially HUAWEI, which wants to sell its Agile controller at a high price. It would appear that an old firmware announcing OpenFlow 1.3 was compliant at certification time, but only when used with old software compliant with OpenFlow 1.3.0 and nothing newer: starting with 1.3.1, the message is broken too.

Funnily enough, I recently bought a HUAWEI smartphone that had trouble with smartwatches. The seller told me that most smartwatches work with every phone except Huawei ones, because their Bluetooth implementation is not compliant. Seems to be a habit…

Use tile-eclipse to launch software on a remote Tilera TileEncoreGX36 through SSH

Here is the setup to make it work:

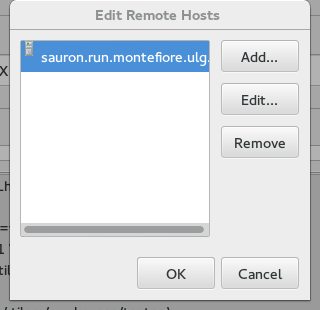

First, find the listening monitor port. In this picture it is 34531.

We’ll have to set up a reverse SSH forwarding so that tile-monitor connects to our tile-eclipse instead of trying to connect to some local listener on the remote host.

Run:

ssh -R 34531:localhost:34531 sauron.run.montefiore.ulg.ac.be

Here, port 34531 is the one you found in tile-eclipse, and sauron.run.montefiore.ulg.ac.be is, in our case, the host to which the tile is connected.

Each time you re-run tile-eclipse you’ll have to redo that part as the port will change.
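Since the port changes at every run, a tiny helper can save some typing. This is only a sketch: the function name `reverse_forward_command` is hypothetical, and you still have to read the port from tile-eclipse yourself.

```python
import shlex
from typing import List


def reverse_forward_command(monitor_port: int, remote_host: str) -> List[str]:
    """Build the ssh command that forwards the remote port back to tile-eclipse.

    monitor_port is the port tile-eclipse listens on locally; the same port
    number is opened on the remote host, as in the command above.
    """
    if not 1 <= monitor_port <= 65535:
        raise ValueError("monitor_port must be a valid TCP port")
    forward = f"{monitor_port}:localhost:{monitor_port}"
    return ["ssh", "-R", forward, remote_host]


if __name__ == "__main__":
    # Host and port taken from the example above; print the command to run.
    cmd = reverse_forward_command(34531, "sauron.run.montefiore.ulg.ac.be")
    print(shlex.join(cmd))
```

The list form can be passed directly to `subprocess.run` if you prefer to launch ssh from a script.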

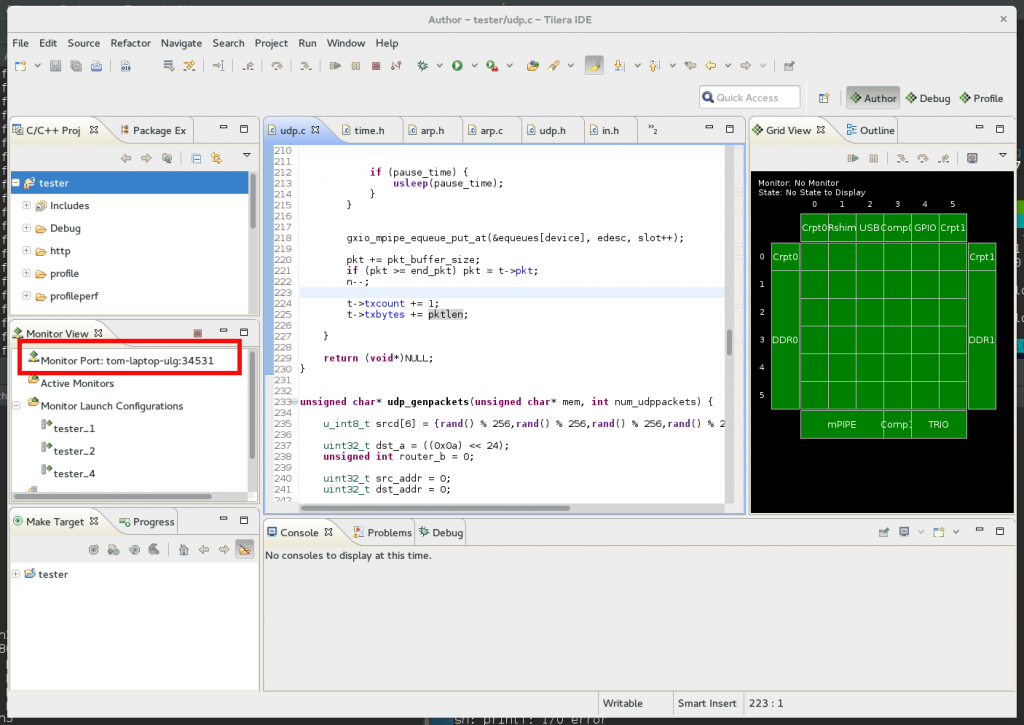

Then, you have to set up your run configuration. This only needs to be done once.

In the hardware part, set up the hardware as follows:

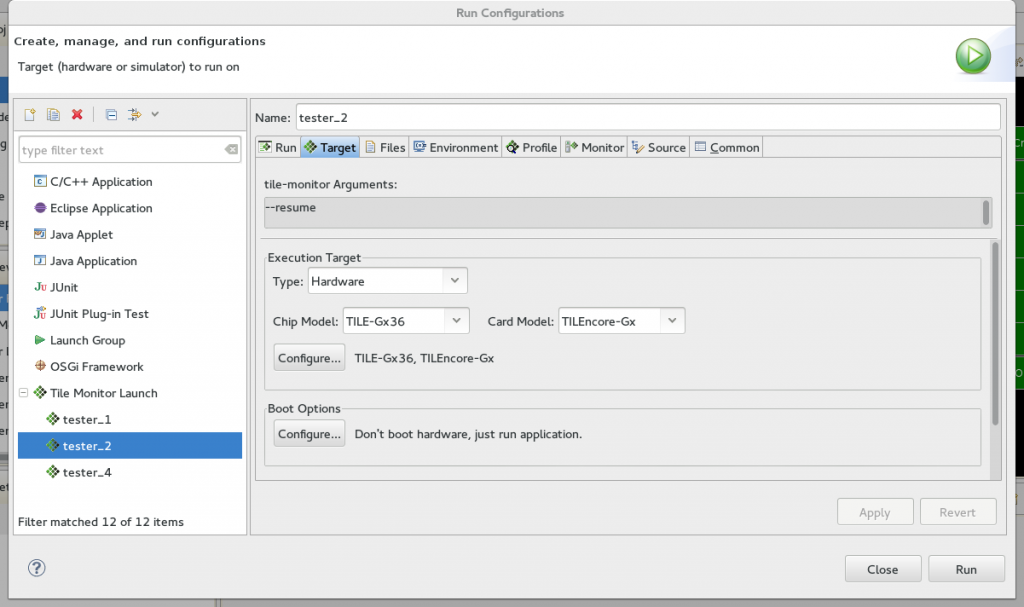

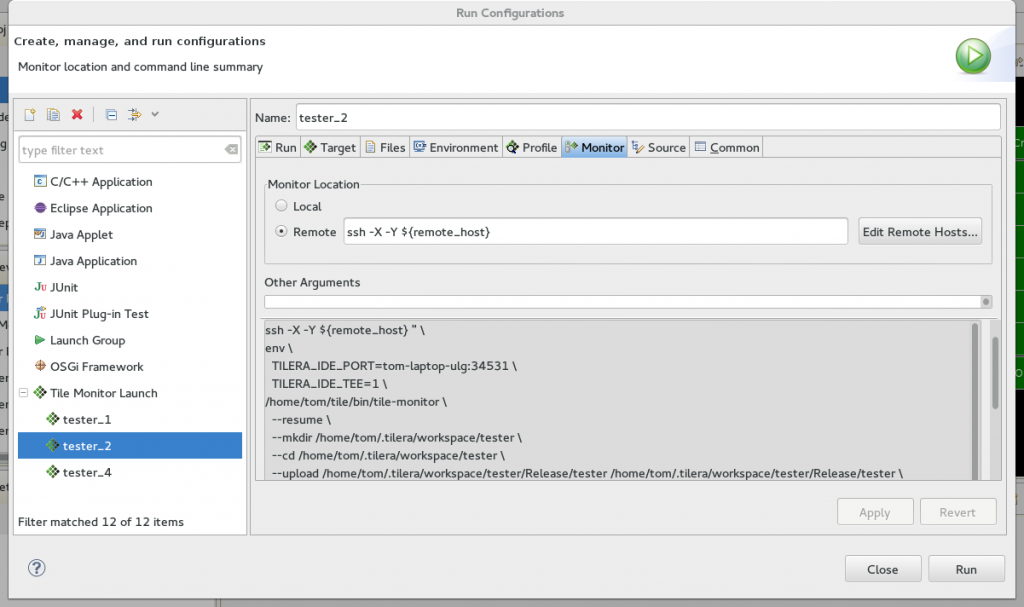

And in the monitor part, set remote as given in this picture and click on manage hosts:

I never found a better way to set it up, that is, one that does not require running the ssh -R reverse forwarding myself, but it should be possible to set it up automatically.

“Fast Userspace Packet Processing” paper available

Our paper “Fast Userspace Packet Processing” has been published at ANCS ’15 and is available in Open Access at http://hdl.handle.net/2268/181954